My Bilibili channel: 香芋派Taro

My personal blog: taropie0224.github.io

My WeChat Official Account: 香芋派的烘焙坊

My audio tech discussion group: 1136403177

My personal WeChat: JazzyTaroPie

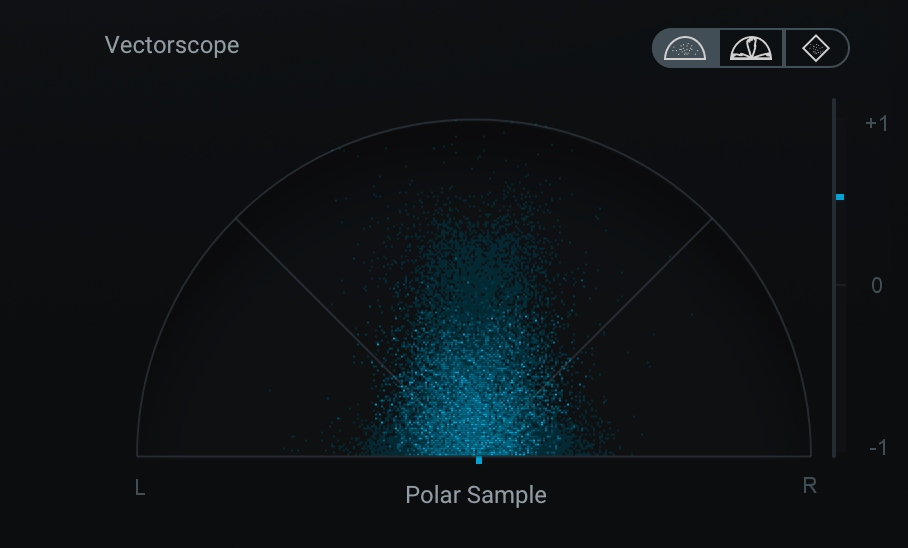

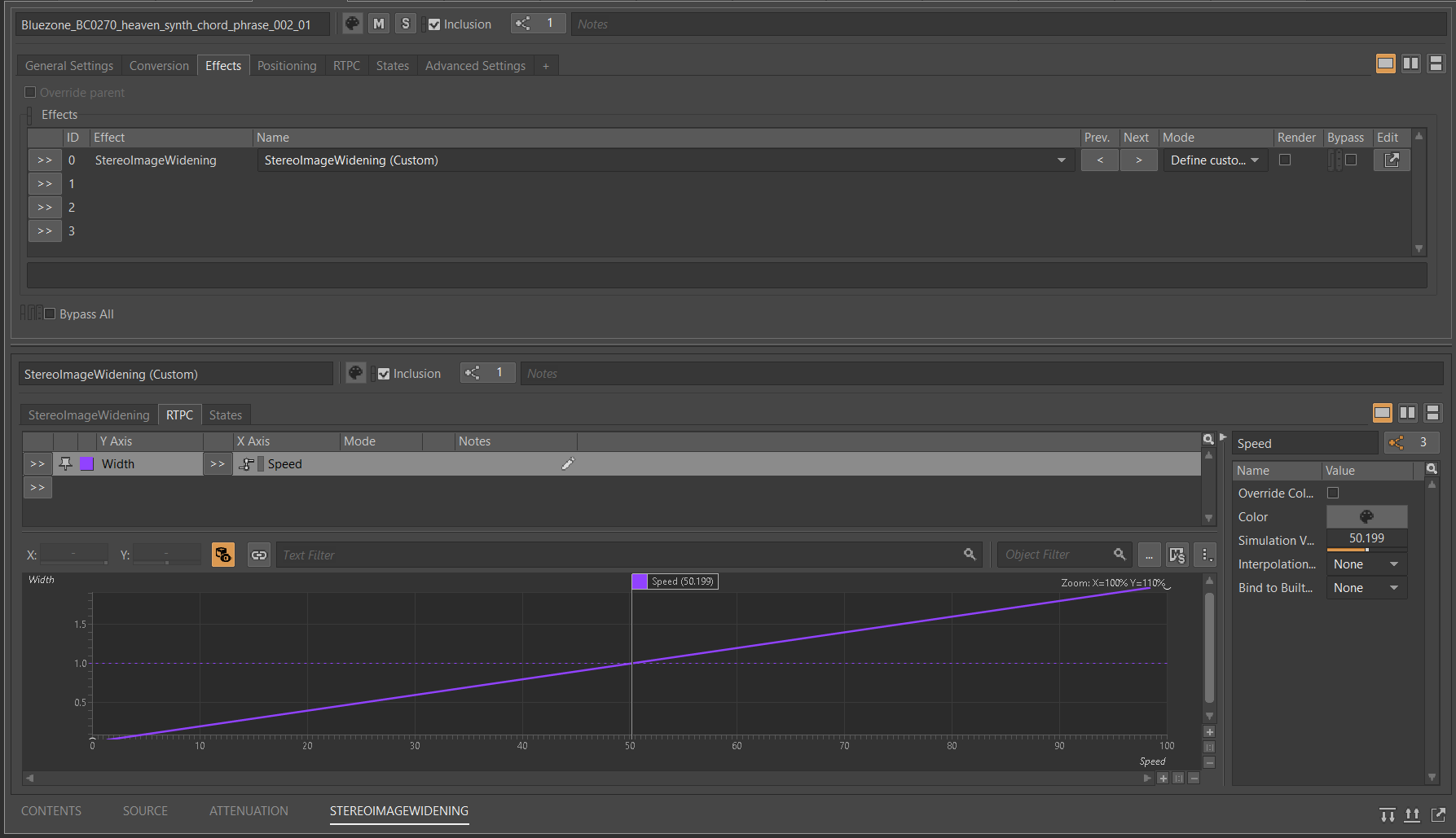

Stereo Image Widening

GitHub: https://github.com/TaroPie0224/TaroStereoWidth

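Stereo widening is based on mid-side processing. To make a signal sound wider, the side channel is boosted relative to the mid channel; to make it sound narrower, the mid channel is boosted relative to the side channel.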
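As a worked check of what the width control does, write $L, R$ for the input samples, $M, S$ for mid and side, and $w \in [0, 2]$ for the width. These are the same encode, process and decode steps used in the code in this post; substituting them into each other gives a closed form for each output channel:

$$
\begin{aligned}
M &= \tfrac{1}{2}(L + R), & S &= \tfrac{1}{2}(L - R),\\
M' &= (2 - w)\,M, & S' &= w\,S,\\
L' &= M' + S' = L + (1 - w)\,R, & R' &= M' - S' = R + (1 - w)\,L.
\end{aligned}
$$

So $w = 1$ leaves the signal unchanged, $w = 0$ collapses it to mono ($L' = R' = L + R$), and $w = 2$ keeps only the side signal ($L' = L - R$, $R' = R - L$). It also shows that narrowing adds level: with $w = 0$, a centered signal ($L = R$) comes out at $2L$, roughly +6 dB.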

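```cpp
// First, obtain the two channel samples: left and right

// Encoding: derive sides and mid from the left and right channels
// (use 0.5f, not 1/2: in C/C++ 1/2 is integer division and evaluates to 0)
float sides = 0.5f * (left - right);
float mid   = 0.5f * (left + right);

// Processing: width ranges from 0 to 2 (1 = unchanged)
float width = 1.0f;
sides = width * sides;
mid   = (2.0f - width) * mid;

// Decoding
float newLeft  = mid + sides;
float newRight = mid - sides;
```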
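To try the steps above outside a plugin, here is a minimal self-contained sketch that wraps them in a function for a single stereo sample pair. The names `StereoSample` and `applyWidth` are hypothetical helpers introduced for illustration; they are not part of the TaroStereoWidth project.

```cpp
#include <cassert>

// Hypothetical helper type: one output sample pair.
struct StereoSample
{
    float left;
    float right;
};

// Hypothetical helper: applies the encode / process / decode steps.
// width is expected in [0, 2]: 0 = mono, 1 = unchanged, 2 = side only.
StereoSample applyWidth (float left, float right, float width)
{
    assert (width >= 0.0f && width <= 2.0f);

    // Encoding
    float sides = 0.5f * (left - right);
    float mid   = 0.5f * (left + right);

    // Processing: scale side up and mid down (or vice versa)
    sides = width * sides;
    mid   = (2.0f - width) * mid;

    // Decoding back to left/right
    return { mid + sides, mid - sides };
}
```

For example, with `left = 0.75f` and `right = 0.25f`, a width of 1 returns the input unchanged, a width of 0 returns `1.0f` on both channels (mono), and a width of 2 returns `0.5f` and `-0.5f` (side only).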

Below is the DSP part of the JUCE plugin's processBlock:

```cpp
void TaroStereoWidthAudioProcessor::processBlock (juce::AudioBuffer<float>& buffer, juce::MidiBuffer& midiMessages)
{
    juce::ignoreUnused (midiMessages);
    juce::ScopedNoDenormals noDenormals;
    auto totalNumInputChannels  = getTotalNumInputChannels();
    auto totalNumOutputChannels = getTotalNumOutputChannels();

    // Read the current parameter values from the AudioProcessorValueTreeState
    auto currentGain  = apvts.getRawParameterValue ("Gain")->load();
    auto currentWidth = apvts.getRawParameterValue ("Width")->load();

    // Clear any output channels that have no corresponding input
    for (auto i = totalNumInputChannels; i < totalNumOutputChannels; ++i)
        buffer.clear (i, 0, buffer.getNumSamples());

    // Mid-side processing needs two channels; leave mono buffers untouched
    if (buffer.getNumChannels() < 2)
        return;

    // Fetch the channel pointers once per block, not once per sample
    auto* channelDataLeft  = buffer.getWritePointer (0);
    auto* channelDataRight = buffer.getWritePointer (1);

    for (int sample = 0; sample < buffer.getNumSamples(); ++sample)
    {
        // Encoding
        auto sides = 0.5f * (channelDataLeft[sample] - channelDataRight[sample]);
        auto mid   = 0.5f * (channelDataLeft[sample] + channelDataRight[sample]);

        // Processing
        sides *= currentWidth;
        mid   *= 2.0f - currentWidth;

        // Decoding, then output gain
        channelDataLeft[sample]  = (mid + sides) * currentGain;
        channelDataRight[sample] = (mid - sides) * currentGain;
    }
}
```
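One possible refinement: processBlock reads Width once per block and applies it to every sample, so an abrupt change of the Width control changes the channel levels in a single step at the block boundary, which can be heard as clicks or "zipper noise". The sketch below is a JUCE-free, hypothetical variant (`processStereoWidthRamped`, `previousWidth` and `targetWidth` are illustrative names, not from the project) that ramps width linearly across the block. It assumes the caller stores the previous block's width, for example in a member variable.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: mid-side width processing on two raw channel buffers,
// ramping width linearly from previousWidth to targetWidth across the block.
// Width values are expected in [0, 2]; gain is a linear factor (not dB).
void processStereoWidthRamped (float* left, float* right, std::size_t numSamples,
                               float previousWidth, float targetWidth, float gain)
{
    assert (left != nullptr && right != nullptr);
    if (numSamples == 0)
        return;

    // Per-sample increment chosen so the last sample uses targetWidth
    const float step = (targetWidth - previousWidth) / static_cast<float> (numSamples);

    for (std::size_t i = 0; i < numSamples; ++i)
    {
        const float width = previousWidth + step * static_cast<float> (i + 1);

        // Encoding
        float sides = 0.5f * (left[i] - right[i]);
        float mid   = 0.5f * (left[i] + right[i]);

        // Processing with the ramped width
        sides *= width;
        mid   *= 2.0f - width;

        // Decoding plus output gain
        left[i]  = (mid + sides) * gain;
        right[i] = (mid - sides) * gain;
    }
}
```

Inside JUCE itself, `juce::SmoothedValue` offers the same kind of per-sample ramping and could be used instead of a hand-written ramp.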